I’m a software engineer and machine learning practitioner with over 6 years of experience in software engineering and 4+ years in machine and deep learning. I’ve contributed as a developer, researcher, and architect on a variety of academic and enterprise projects, including chat systems, payment and wallet management, e-commerce platforms, distributed computing, computer vision, image processing, and Android applications, serving both B2B and B2C markets.

I’m genuinely passionate about Generative AI, Computer Vision, Deep Learning, AI4SE, SE4AI, and AIOps, and I enjoy exploring complex problems that require mathematical thinking and innovative solutions, such as diffusion models, multimodal LLMs, transformers, and graph neural networks.

I’m currently seeking opportunities as a software engineer (Java/Spring), machine learning engineer, or research-focused role where I can combine my software engineering experience with cutting-edge ML expertise to build impactful, scalable solutions.

I work primarily with Java and Python, and have extensive experience with Spring Framework and PyTorch, along with a working knowledge of other languages and platforms.

daneshvarshayan [at] gmail [dot] com

Computer Science Graduate (M.Sc.) from the University of Manitoba.

Education

MSc in Computer Science - ML4SE (Focused on Deep Learning, Computer Vision, and Data-driven Software Engineering)

University of Manitoba (Jan 2023 – Apr 2025, Winnipeg, Canada)

GPA: 4.5/4.5 - A+

Thesis: Representation-level Augmentation and RAG-enhanced Vulnerability Augmentation with LLMs for Vulnerability Detection

K. N. Toosi University of Technology (Sep 2018 – July 2022, Tehran, Iran)

GPA: 19.21/20 - (US CGPA: 4/4 - A+)

Thesis: Reflection Removal of In-vehicle Images (19.5/20) - Supervisor: Dr. Behrooz Nasihatkon

Experience

Please see my LinkedIn page for details of the roles I have held. My CV contains a summarized version.

Publications

- [J1] The application of barcode readable assay and linear regression RGB analysis using a customized smartphone app in on-chip electromembrane extraction for simultaneous determination of heavy metal ions. Neda Rezaei, Seyyed Shayan Daneshvar, Behrooz Nasihatkon, Shahram Seidi, Maryam Rezazadeh. 2024. (Microchemical Journal, Q1, IF: 5.4)

- [J2] VulScribeR: Exploring RAG-based Vulnerability Augmentation with LLMs. Seyed Shayan Daneshvar, Yu Nong, Xu Yang, Shaowei Wang, Haipeng Cai. 2025. (TOSEM journal, Q1, IF: 7.0)

- [C1] A Study on Mixup-Inspired Augmentation Methods for Software Vulnerability Detection. Seyed Shayan Daneshvar, Da Tan, Shaowei Wang, Carson Leung. 2025. (Accepted at EASE 2025, CORE A Conference)

- GUI Element Detection Using SOTA YOLO Deep Learning Models. Seyed Shayan Daneshvar, Shaowei Wang. 2024. (arXiv pre-print)

Projects

Over the past years, I have worked on projects in fields ranging from enterprise web development and software engineering to machine/deep learning and computer vision.

Here are some of those projects:

(I try to keep this list updated and to add earlier projects; it is incomplete at the moment!)

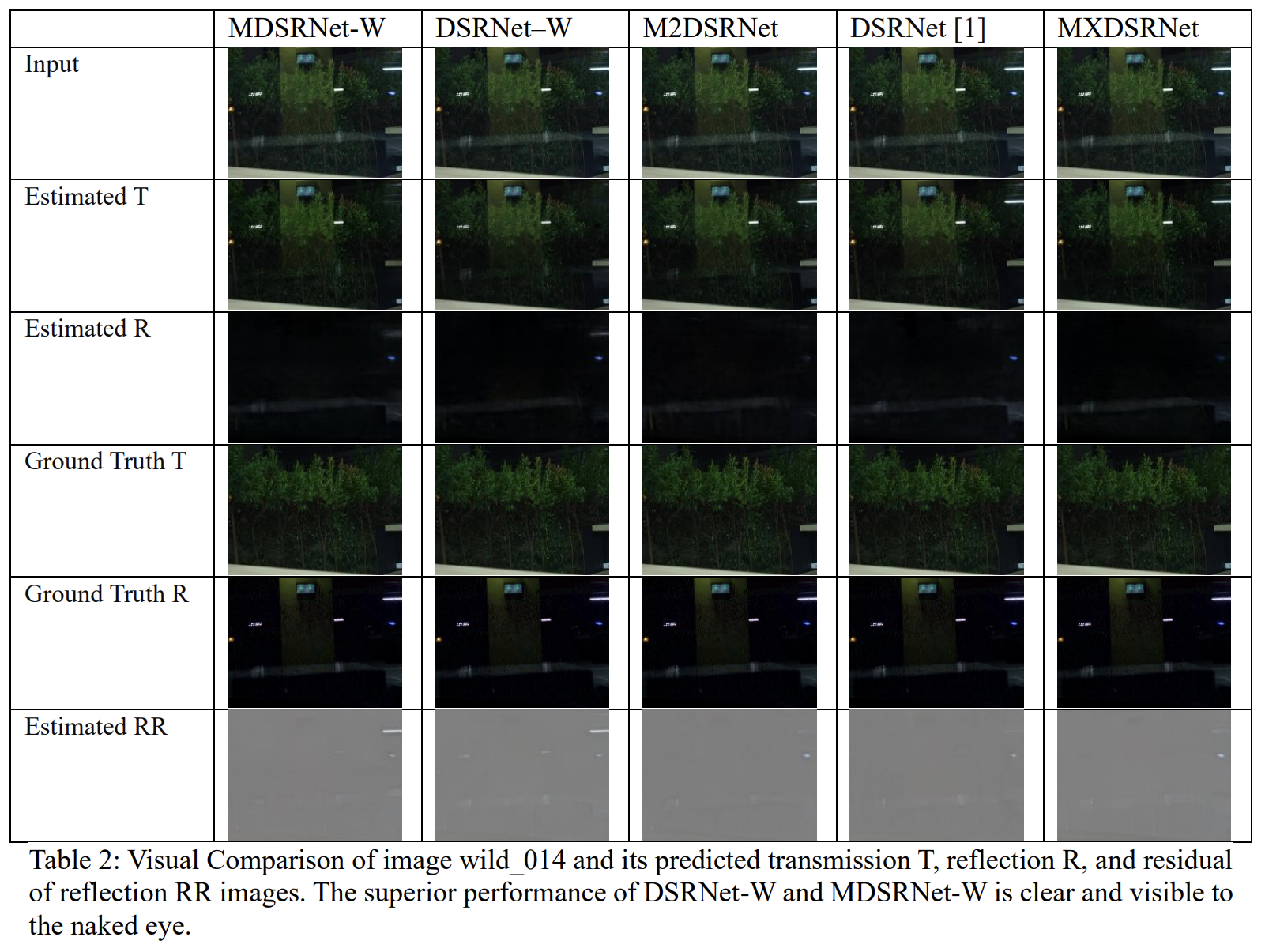

In this project, I replicated the results of the best SOTA Single Image Reflection Removal model (DSRNet), created an image-based version of a SOTA state-space model (Mamba/S6), and replaced the attention-based modules of DSRNet with Mamba modules to improve the performance of the model.

I also investigated the effect of the Cosine Annealing learning rate schedule and AdamW's weight decay.
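For readers unfamiliar with the two ingredients named above, here is a minimal, framework-free sketch of what they compute. It is an illustration only, not the project's training code: the function names `cosine_annealing_lr` and `adamw_decay` and all default values are assumptions made for this example.

```python
import math


def cosine_annealing_lr(step, total_steps, lr_max=1e-3, lr_min=0.0):
    """Learning rate at `step` for one cosine-annealing cycle.

    The rate starts at lr_max, follows half a cosine wave, and ends at lr_min.
    `total_steps` is assumed to be positive; lr_max/lr_min are illustrative.
    """
    progress = min(max(step / total_steps, 0.0), 1.0)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


def adamw_decay(weight, lr, weight_decay=1e-2):
    """Decoupled weight decay as used by AdamW.

    The weight is shrunk directly by lr * weight_decay * weight, separately
    from the gradient-based Adam update (which is omitted here).
    """
    return weight - lr * weight_decay * weight


if __name__ == "__main__":
    for step in (0, 25, 50, 75, 100):
        print(step, cosine_annealing_lr(step, total_steps=100))
```

In practice a deep learning framework would provide both pieces; the sketch only shows why the schedule and the decay term interact, since the decay applied at each step scales with the current learning rate.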

In this project, I trained and tested several of the latest YOLO object detection models on a new GUI element detection dataset.

I asked and answered 3 new research questions related to GUI element detection and the performance of different models in terms of mean average precision at an IoU threshold of 0.5 (mAP@0.5).
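The IoU criterion mentioned above can be sketched in a few lines. This is a generic illustration, not code from the project: the `(x1, y1, x2, y2)` box format and the helper names `box_iou` and `is_match` are assumptions.

```python
def box_iou(a, b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Width and height of the overlap, clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, gt_box, threshold=0.5):
    """A prediction counts as a hit when its IoU with the ground truth exceeds the threshold."""
    return box_iou(pred_box, gt_box) > threshold


if __name__ == "__main__":
    print(box_iou((0, 0, 10, 10), (5, 0, 15, 10)))   # half-overlapping boxes
    print(is_match((0, 0, 10, 10), (1, 1, 10, 10)))  # nearly identical boxes
```

Average precision is then computed from the hits and misses ranked by confidence; that part is left out to keep the sketch short.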

I will submit this project as a paper to a journal or conference in late May and will add more information here once it is accepted!

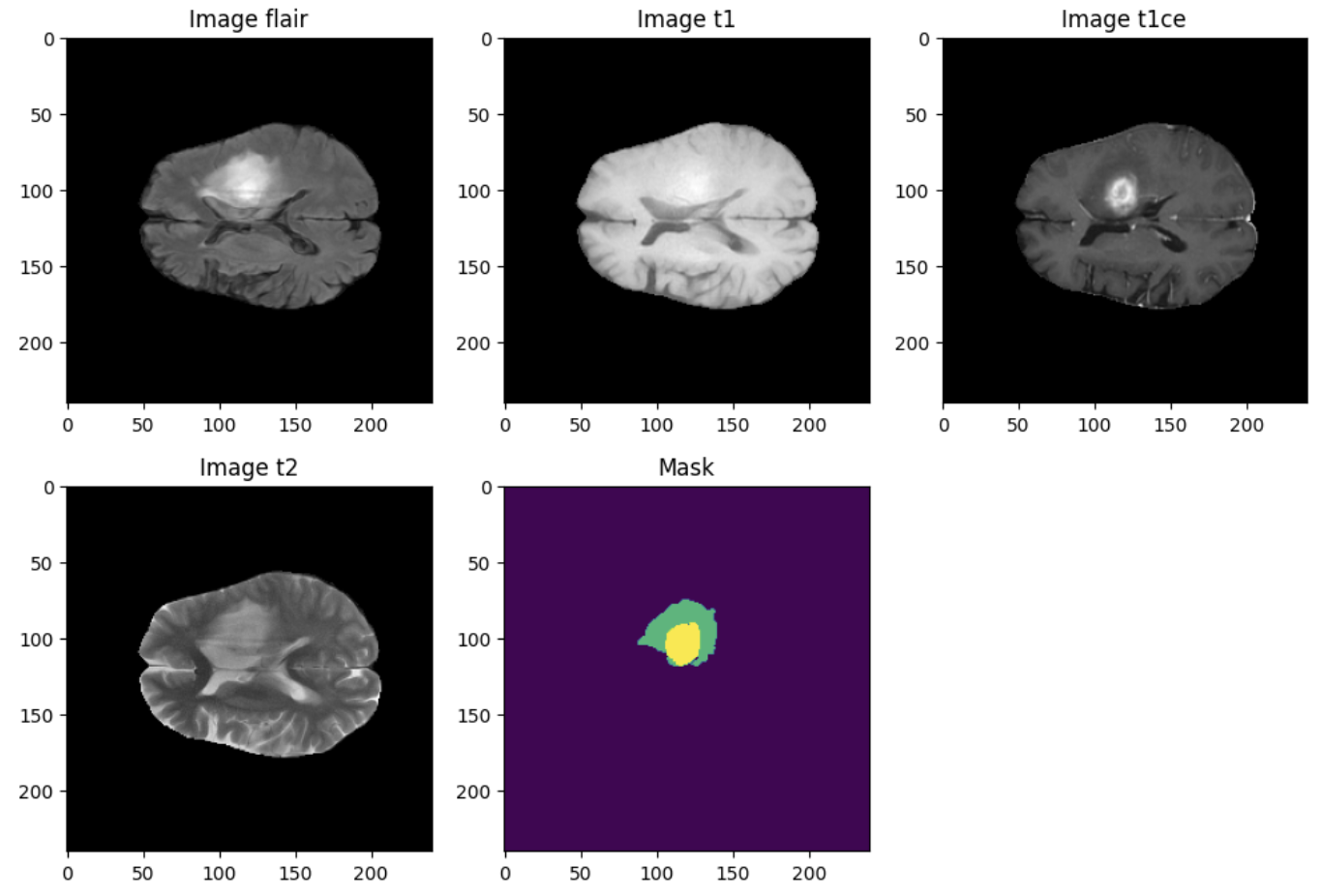

3D segmentation of brain tumors on the BraTS2020 dataset. I created, trained, and tested 3 variants of 3D UNet, namely Vanilla 3D UNet, Residual 3D UNet, and 3D UNet with a custom attention mechanism, using Dice and BCE+Dice losses.

I also helped my colleague use the segmentation results to train an FCN to predict survival rates.
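As a rough illustration of the Dice and BCE+Dice losses named above, the sketch below computes them on flat lists of predicted probabilities and binary targets. It is not the project's code: the function names, the smoothing constants, and the unweighted sum in `bce_dice_loss` are assumptions, and a real 3D pipeline would operate on tensors.

```python
import math


def dice_loss(probs, targets, eps=1e-6):
    """Soft Dice loss: 1 - 2*|P.T| / (|P| + |T|), smoothed by eps."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clamped to avoid log(0)."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(probs)


def bce_dice_loss(probs, targets):
    """Unweighted sum of BCE and Dice (the weighting is an assumption)."""
    return bce_loss(probs, targets) + dice_loss(probs, targets)


if __name__ == "__main__":
    target = [1, 1, 0, 0]
    print(bce_dice_loss([0.9, 0.8, 0.1, 0.2], target))  # good prediction
    print(bce_dice_loss([0.1, 0.2, 0.9, 0.8], target))  # poor prediction
```

Combining the two is a common choice for segmentation because Dice copes with class imbalance (small tumors in a large volume) while BCE gives smoother per-voxel gradients.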

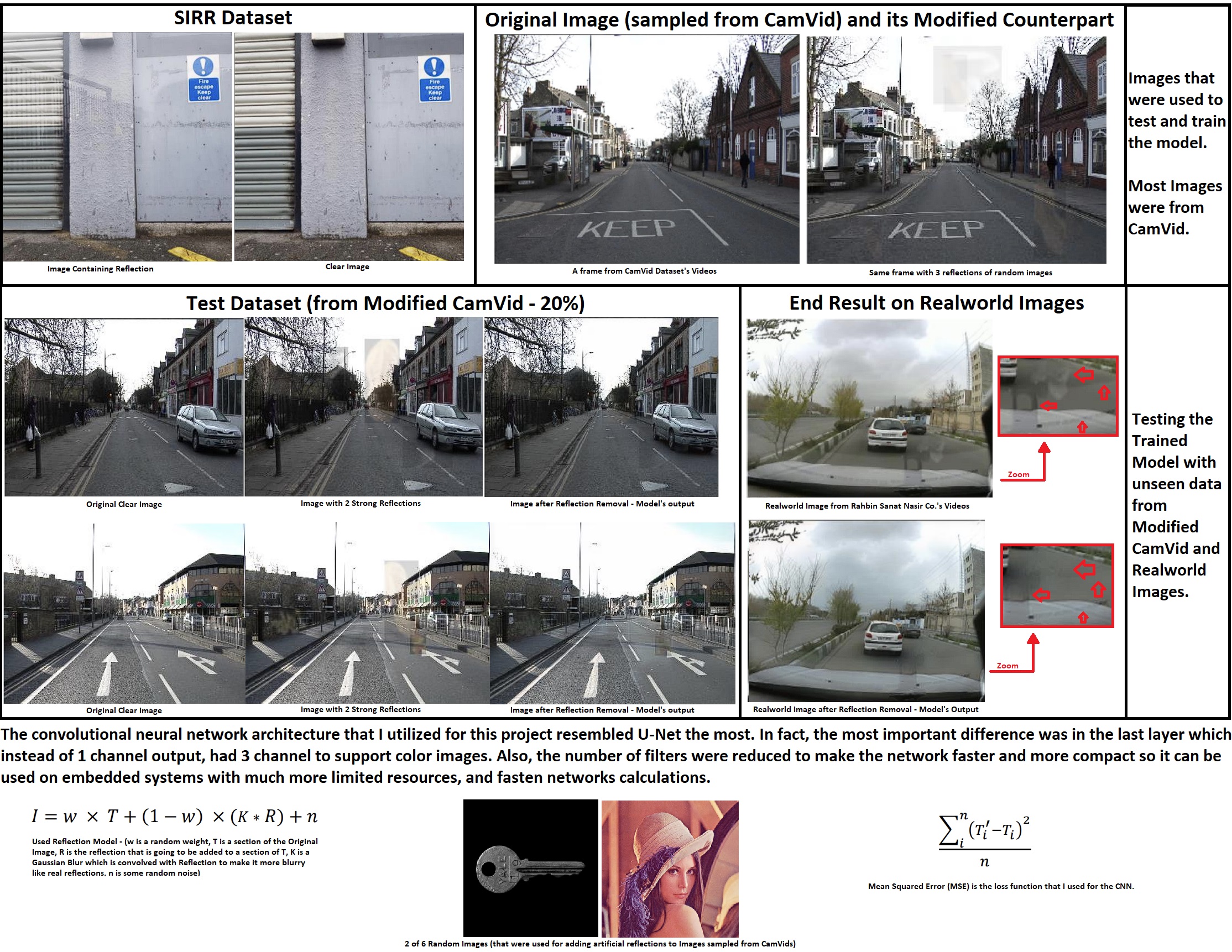

In this project, I had to build and train a deep learning model that could remove or reduce the effect of reflections in in-vehicle images in a way that the end result would look natural. First, I used a dataset of real-world street and road images (from the CamVid dataset) and synthesized a new dataset with reflections of various objects. Then, I came up with a CNN that resembled the original U-Net neural network but with 3 channels for each pixel in the output. Finally, I trained various similar networks with different depths and kernel sizes to arrive at the final solution, which could remove most reflections and restore the original image section behind the reflection.

Take a look at the repository of this project to see the abstract and the thesis in Persian (English abstract available).

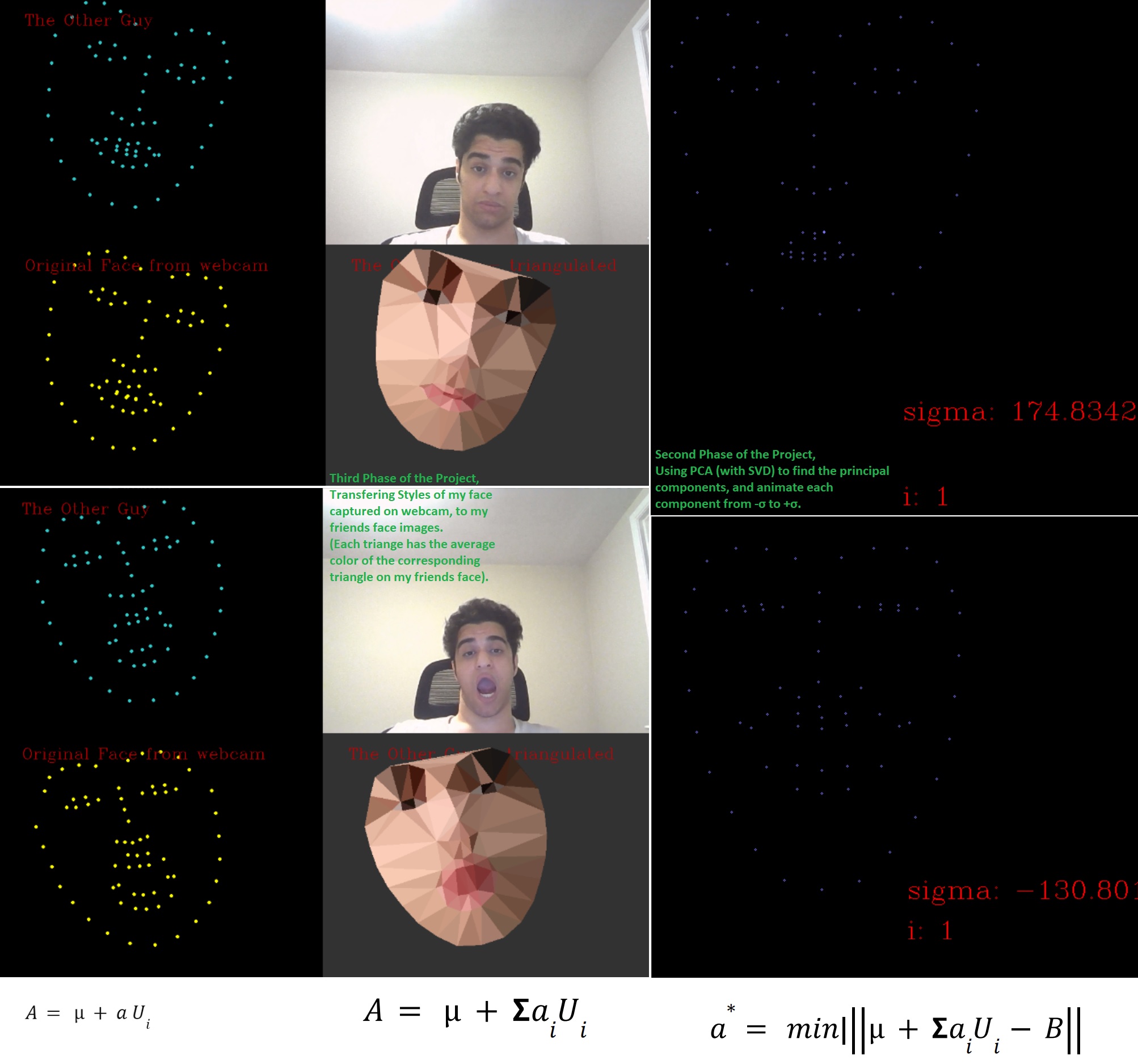

In this project, my friend and I had to take various pictures of ourselves, find the face landmarks with the dlib library, and calculate the average face. Then, we had to register the faces to the average, transforming the photos with affine and similarity transformation matrices.

In the second phase, we calculated the principal components (PCA) using Singular Value Decomposition (SVD), found the top 10 principal components, and animated the top 10 modes.

In the third phase, we had to transfer the gesture in a webcam image to each other's face model (by finding the optimum a via least squares) and display it.

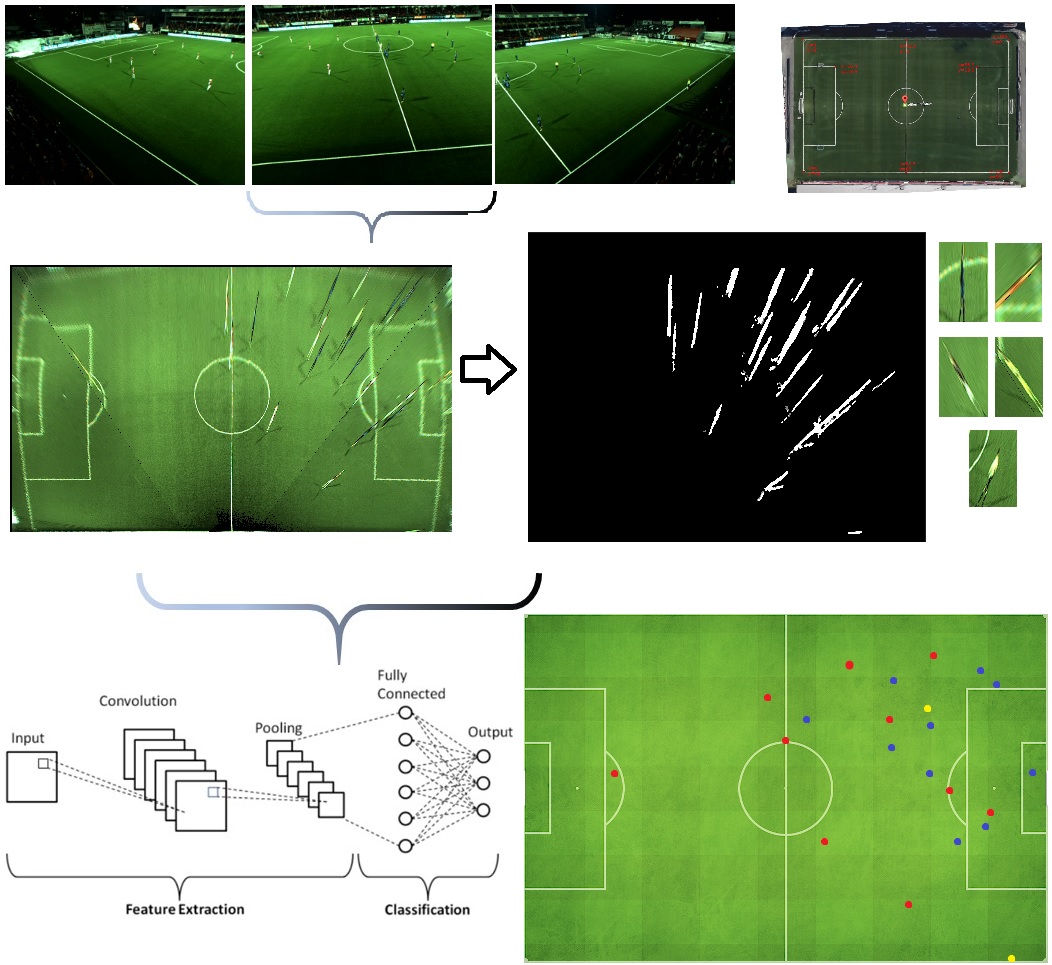

In this project, I had to detect and classify soccer players and referees, and finally draw them in 3 colors on an artificial soccer field.

To detect the players, I used the KNN background subtraction algorithm alongside basic morphology techniques and the connected component algorithm. To classify them, I created a dataset of players and referees and trained a simple Convolutional Neural Network (CNN).
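To make the connected component step above concrete, here is a minimal sketch that turns a binary foreground mask (as produced by background subtraction and morphology) into one bounding box per blob. It is an illustration in plain Python, not the project's code: the 4-connectivity choice, the nested-list mask format, and the function name `connected_components` are assumptions.

```python
from collections import deque


def connected_components(mask):
    """Return one bounding box (row_min, col_min, row_max, col_max) per
    4-connected region of truthy cells in a 2D mask (list of lists)."""
    if not mask or not mask[0]:
        return []
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill starting from an unvisited foreground cell.
            queue = deque([(r, c)])
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            while queue:
                y, x = queue.popleft()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes


if __name__ == "__main__":
    mask = [
        [1, 1, 0, 0, 0],
        [1, 1, 0, 0, 1],
        [0, 0, 0, 0, 1],
    ]
    print(connected_components(mask))
```

Each box can then be cropped from the frame and passed to a classifier; an image processing library would normally do this labeling far faster than pure Python.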

The final model reached an accuracy of over 98%. The whole pipeline was fast enough to detect, classify, and visualize players and referees smoothly (10 fps on a mid-range laptop).

I also used a transform to get the exact location on a bird's-eye view of the field. (I had to combine footage from three video cameras, and I chose to use a transform instead of creating a panorama image.)

To make the calculations faster, I tried to use motion estimation algorithms, like optical flow, and to skip a few frames of the original video, but it was not very successful. (So, the final code repository does not contain the code for this part!)

In this project, I had to find an interesting problem and solve it with a Genetic Algorithm, Genetic Programming, and a Multi-Objective Optimization Algorithm without using any genetic or optimization algorithm libraries. I chose the text summarization problem, as I had heard about it in a previous course (Fundamentals of Speech and Natural Language Processing), and chose NSGA-II as the multi-objective algorithm. I implemented all three algorithms in Java using OOP, and used two optimization criteria: lower similarity between the selected sentences and higher sentence scores.
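The core idea behind a multi-objective method such as NSGA-II is Pareto dominance: one candidate summary beats another only if it is no worse on both criteria and strictly better on at least one. The sketch below shows that idea for the two criteria named above. It is written in Python for brevity (the project itself was in Java) and is not the project's code: the `(similarity, score)` tuple layout and the function names are assumptions, and the remaining parts of NSGA-II (ranking of later fronts, crowding distance, selection) are omitted.

```python
def dominates(a, b):
    """True if candidate a dominates candidate b.

    Each candidate is (similarity, score): similarity is minimized,
    score is maximized.
    """
    no_worse = a[0] <= b[0] and a[1] >= b[1]
    strictly_better = a[0] < b[0] or a[1] > b[1]
    return no_worse and strictly_better


def pareto_front(candidates):
    """Candidates that no other candidate dominates (the first front)."""
    return [
        c for c in candidates
        if not any(dominates(other, c) for other in candidates)
    ]


if __name__ == "__main__":
    # Hypothetical (similarity, score) values for four candidate summaries.
    summaries = [(0.2, 5.0), (0.4, 7.0), (0.5, 6.0), (0.3, 4.0)]
    print(pareto_front(summaries))
```

The front keeps trade-offs rather than a single winner, which is why a multi-objective algorithm returns a set of summaries instead of one.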

The results for NSGA-II were so promising that the course instructor offered me a research assistant opportunity to optimize it a little more and publish it as a new, fast genetic method for text summarization. (Sadly, I couldn't accept, as I was busy working as a research assistant, teaching assistant, and software developer at the same time!)

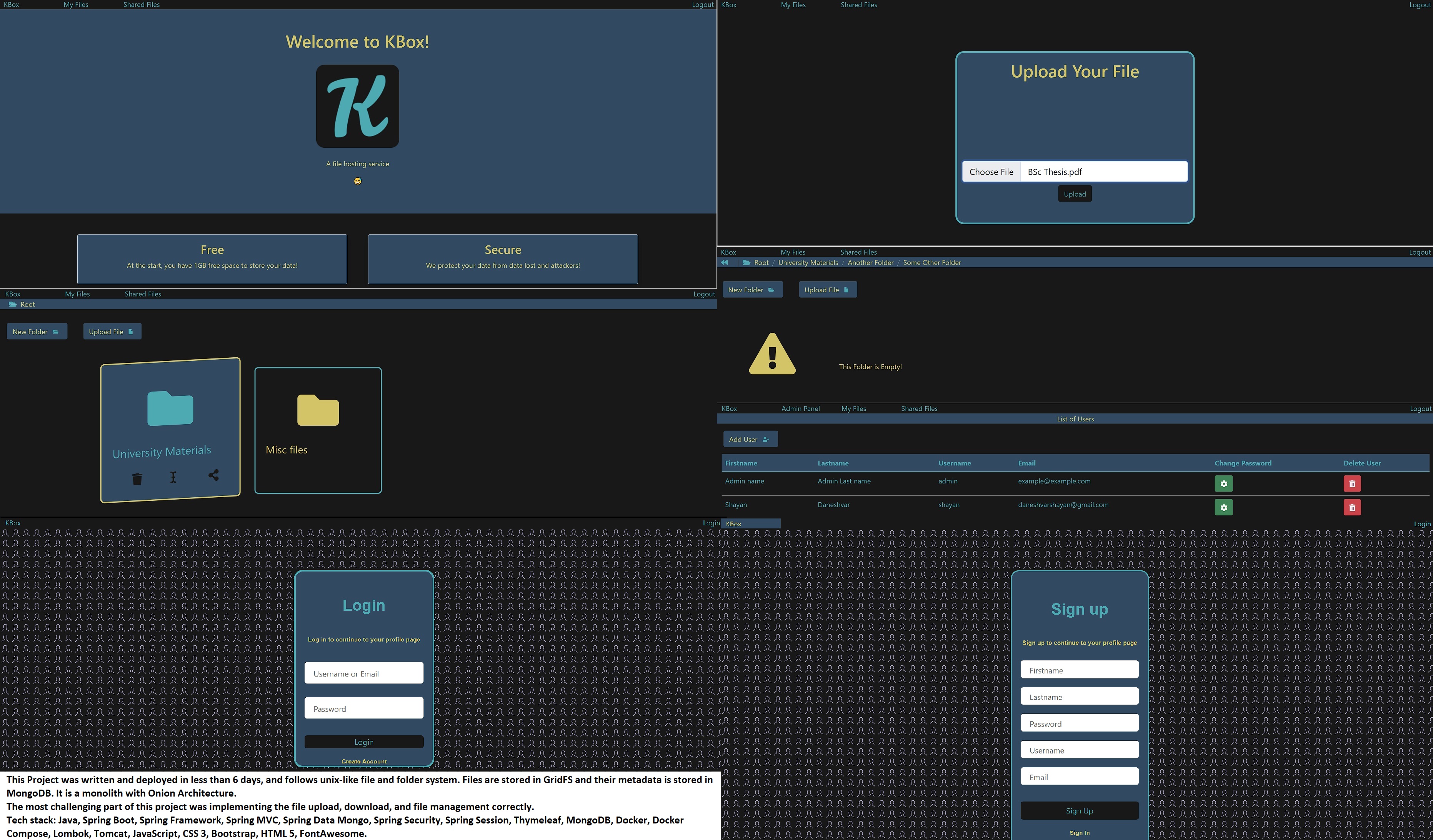

In this project, I developed a full monolithic website with Spring Framework, Spring Data, Spring Security, Java, MongoDB, MongoDB GridFS, JS, CSS, HTML, and Thymeleaf. You could upload and download your files anytime you wanted, and you could share files with another person via email or by sharing a link. It was deployed and available at kbox.shayandaneshvar.ir for over 2 months, and it can easily be deployed to production again at any time.

This project is a website where people could take tests in various subjects, mostly in software engineering, and find out which topics they need to learn better. Also, the admins could upload videos with different titles on various topics (both free and paid), and users could pay to watch a video and strengthen their skills.

I was responsible for the design, development, and deployment of the backend services, and one of my friends was responsible for the UI/UX design and development of the website.

Sadly, no sponsors were found and the project was cancelled. (So, if you are interested in the source code, just send me an email.) Services that were completed or reached the MVP stage: Video Service, Auth Service, and Quiz Service.

Tech Stack:

Backend: Service-Oriented Architecture, Spring Boot, Spring MVC, Spring Data (JPA, Mongo), Spring Cloud, Spring Security (JWT, OAuth2), Spring Mail, Spring AOP, Hibernate, Docker, Docker-Compose, Nginx, MongoDB, PostgreSQL, H2, Lombok, MapStruct, JUnit5, Mockito, Maven.

Frontend: JS/TS, React.js, Next.js, Tailwind, Material UI, SWR, Redux, PM2, VideoJS, NodeJS, Yarn, NPM.

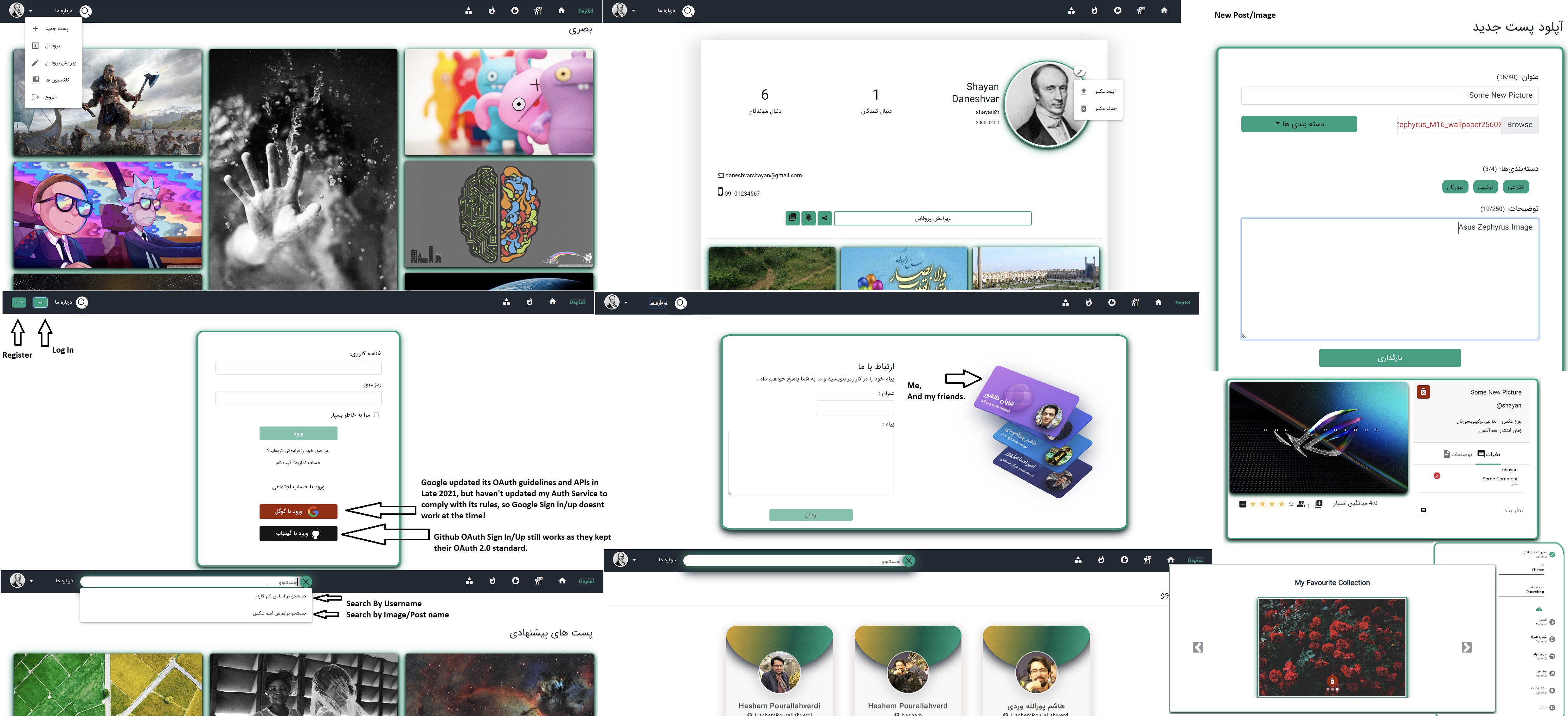

In this project, we could build any sort of software we wanted, so three other people and I decided to build a photo-sharing website.

I was responsible for the backend design, development, and deployment. I also helped one of my friends develop two recommender systems for the website (a Categorical Filtering Recommender and a Collaborative Filtering Recommender).

I also helped my frontend developer friends use Angular's Auth Guard and store the JWT in the user's local storage, and I developed the login page.

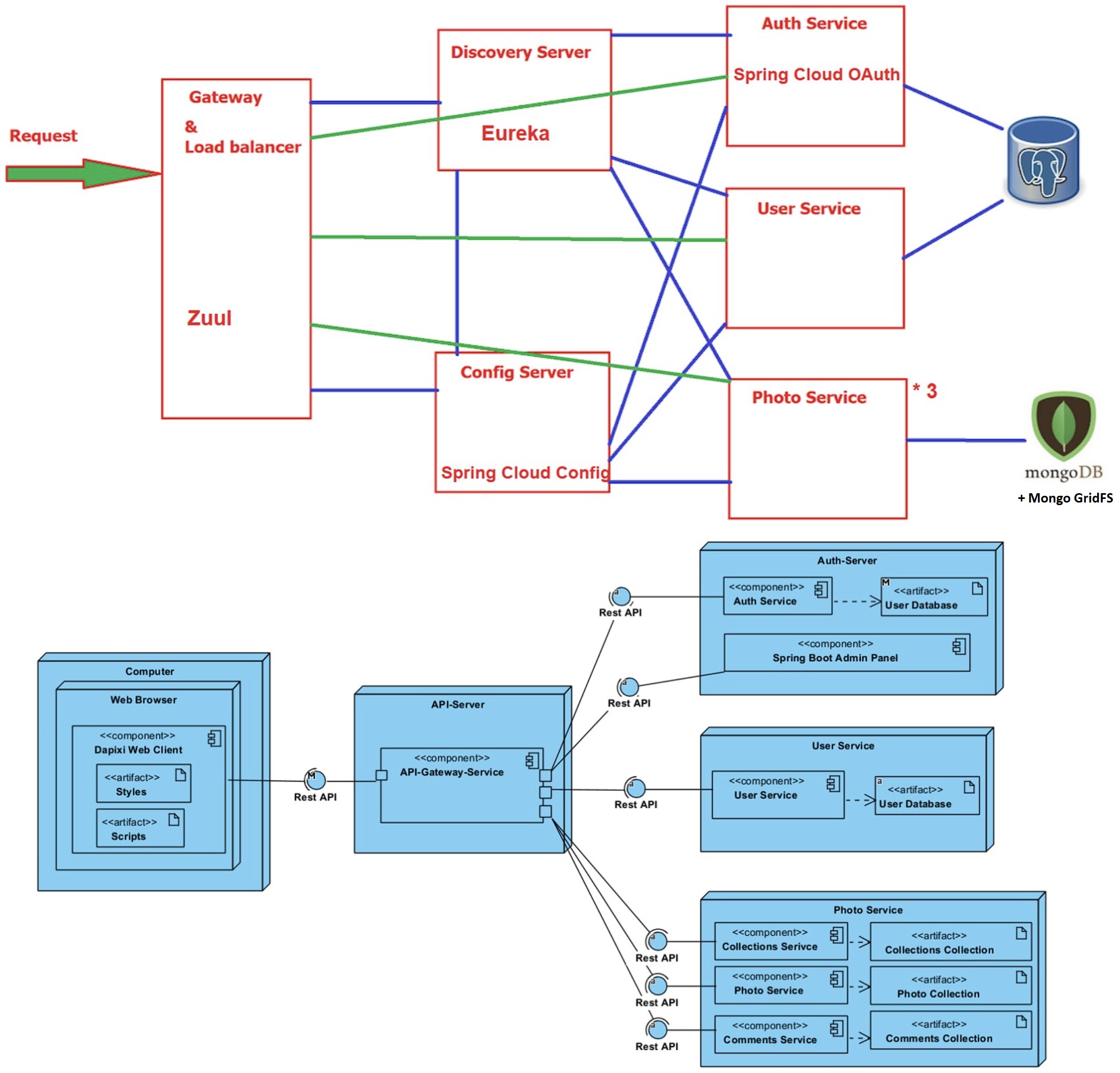

The project has a microservices architecture and consists of the following services: Auth(N/Z) and OAuth Service, User Service, Service Discovery Server, Gateway Service (Zuul v1), Photo Service, and an Externalized Configuration service.

The backend is over 12K lines of Java code alongside over 2K lines of configuration, Dockerfiles, etc.

Technologies:

Frontend: HTML, CSS, SASS, JS, TS, Angular 10, Angular Material, Bootstrap

Backend: Microservices Architecture, Java, Spring Boot, Spring MVC, Spring Data JPA, Spring Data Mongo, MongoDB, H2, Postgres, Docker, Maven, Spring Cloud (Eureka Discovery, Config Server, Zuul Gateway, Hystrix Circuit Breaker, Hystrix & Turbine Dashboard, Code Centric's Admin Panel, OpenFeign, Spring Cloud Security (OAuth2)), Spring Security OAuth2 Client, JWT, Spring Mail, Mockito, JUnit5, AssertJ, Swagger/OpenAPI 3, REST API with Jackson.

Recommender System: Python 3, scikit-learn, Pandas, NumPy.

Deployment: Nginx, Docker-Compose, Ubuntu 18.04

The website is still up and running (for over 2 years now!), but it is a little slow because it is deployed on very cheap, slow servers in Iran.

The source code is private because it contains some important credentials, like email access, database passwords, OAuth tokens, etc. However, I am willing to share the other parts. Send me an email if you are interested!

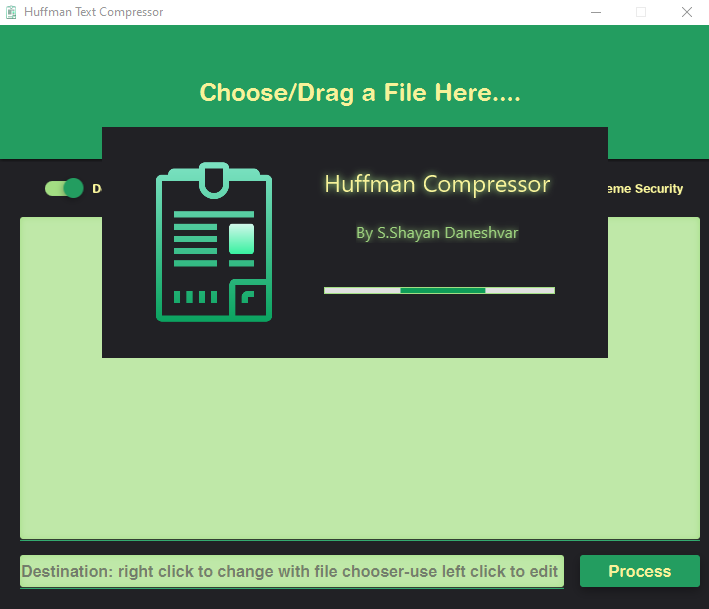

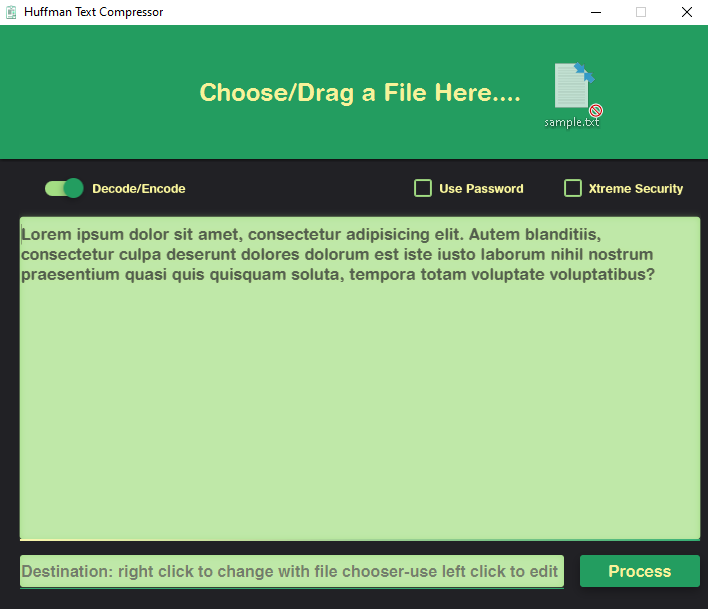

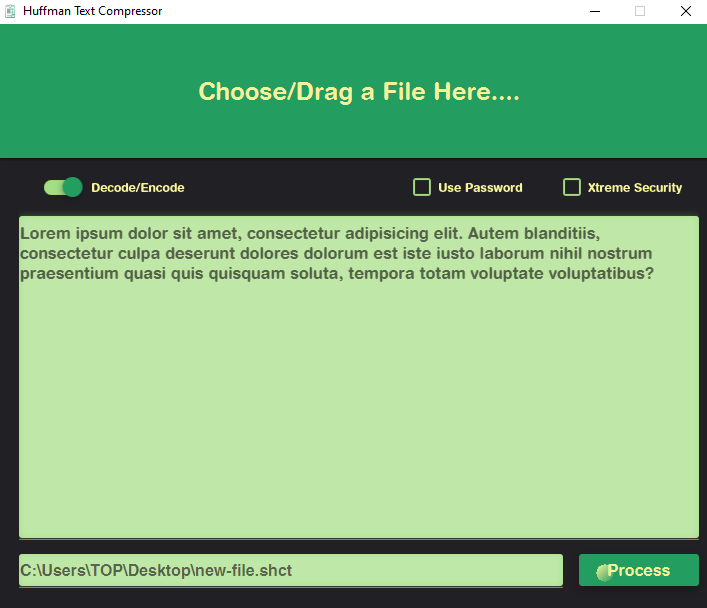

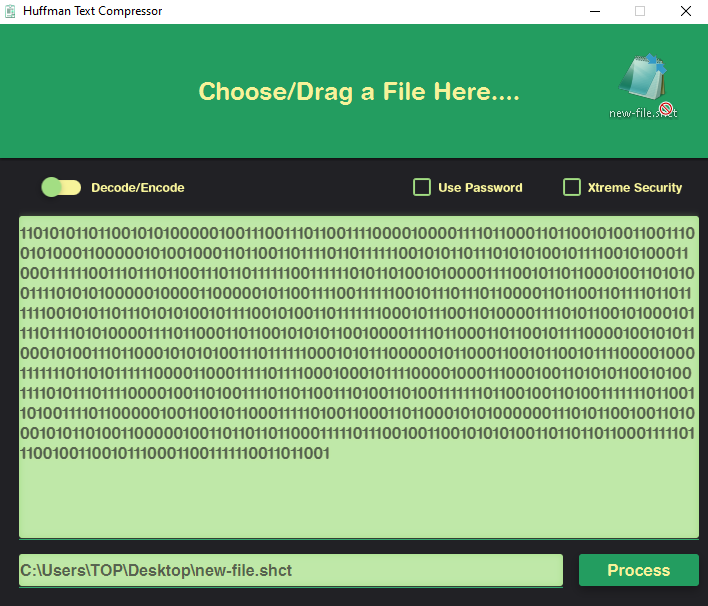

In this project, I implemented the Huffman compression algorithm and its tree data structure from scratch, and used Java's RandomAccessFile to create a text compression program with an optional lock.
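For readers who have not seen Huffman coding, the sketch below builds the code table from symbol frequencies and round-trips a string through it. It is a Python illustration, not the project's Java implementation: the function names, the tuple-based tree, and the string-of-bits output are assumptions, and file I/O and the optional lock are left out.

```python
import heapq
from collections import Counter


def build_codes(text):
    """Map each character of `text` to its Huffman bit string."""
    freq = Counter(text)
    if not freq:
        return {}
    # Heap entries are (weight, tie_breaker, tree); a tree is either a
    # single character (leaf) or a (left, right) tuple (internal node).
    heap = [(w, i, sym) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, t1 = heapq.heappop(heap)
        w2, _, t2 = heapq.heappop(heap)
        heapq.heappush(heap, (w1 + w2, next_id, (t1, t2)))
        next_id += 1

    codes = {}

    def walk(tree, prefix):
        if isinstance(tree, tuple):
            walk(tree[0], prefix + "0")
            walk(tree[1], prefix + "1")
        else:
            # A text with one distinct symbol still needs a 1-bit code.
            codes[tree] = prefix or "0"

    walk(heap[0][2], "")
    return codes


def encode(text, codes):
    return "".join(codes[ch] for ch in text)


def decode(bits, codes):
    inverse = {code: sym for sym, code in codes.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:  # codes are prefix-free, so the first hit is right
            out.append(inverse[current])
            current = ""
    return "".join(out)


if __name__ == "__main__":
    message = "abracadabra"
    table = build_codes(message)
    bits = encode(message, table)
    print(table, len(bits), decode(bits, table) == message)
```

Frequent characters get shorter codes, which is where the compression comes from; a real tool would also pack the bits into bytes and store the table alongside the data.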

Note: I haven't included most of my projects here (e.g. various Algorithm Design course projects, a Cepstrum Transform implementation in Matlab, an HTTP server implementation, a Buddy Memory Management Algorithm implementation, etc.). Feel free to take a look at my GitHub, where over 90% of the projects I have done are available (mostly without documentation or a README, though!).